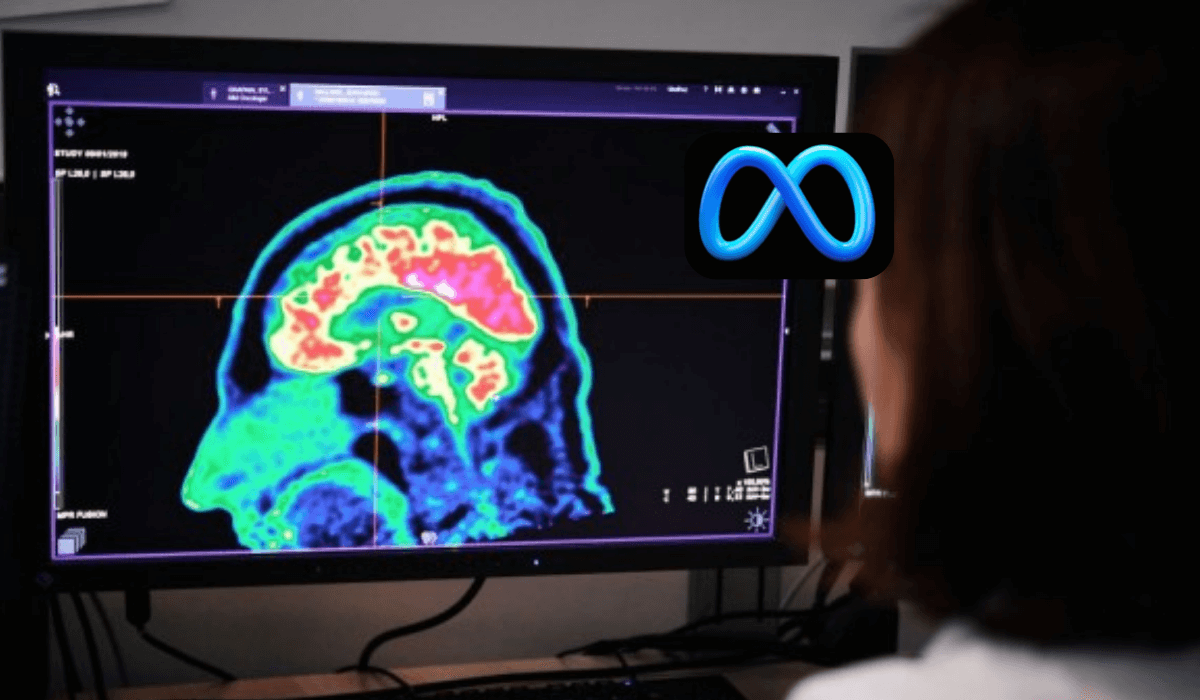

Meta researchers are designing an AI (artificial intelligence) that can peek at human brainwaves. The system aims to work out what a person is hearing by studying their brain activity.

On August 31, Meta announced that it is developing the AI. According to experts, the company is designing a new way to comprehend what is in people’s minds.

According to the most recent news, the research is still in its early stages. It is intended as a starting point for assisting people with brain injuries who cannot communicate through typing or speaking. The researchers are trying to track the brain’s activity non-invasively, without probing the brain with electrodes, which requires surgery.

Reports show that the Meta study looked at 169 adult participants who listened to stories and sentences read aloud while the researchers recorded their brain activity with different devices. The scientists then fed this data to the AI model to find patterns. They wanted the algorithm to “hear”, or determine, what people were listening to from the magnetic and electrical activity in their brains.

Uses of this Technology

Meta does not primarily intend to turn this technology into a commercial product. It could be a first step toward reading nonverbal people’s thoughts so that they can communicate more effectively.

According to Jean-Rémi King, a researcher at the Facebook Artificial Intelligence Research (FAIR) Lab, the technology could be particularly beneficial to people who suffer from conditions that affect communication, including traumatic brain injuries and anoxia.

Upcoming Challenges

King said that one of the significant challenges the team now faces is noisy signals. Because the approach is non-invasive, there is no direct connection to the brain and no electrode is placed inside it. The skin and skull corrupt the signals and make them noisy, so more advanced sensors are needed.

The second challenge is how to decode the brainwaves into readable language.

FAIR is working to overcome these challenges.